In our third installment of Thought Leaders, meet a faculty member who joined Cal Poly through its universitywide cluster hire program. This program welcomes educators in a variety of fields who, through their teaching and scholarship, illuminate how diversity and inclusion intersect with their fields of study.

Deb Donig, assistant professor in the English Department, focuses her scholarship on the busy intersection between technology and ethics through an unexpected lens: literature. She says the seeds for what people dream up, and then dare to create, are planted in the narratives we consume and recycle.

“How we imagine things depends on the stories that we’re told. In fact, some of the most significant pieces of technology were imagined in things like science fiction,” Donig says. “The inroad into thinking about how we imagine — and then thinking about how we can humanistically reimagine — is by looking at the terrain of the imagination.”

Donig cofounded the Ethical Technology Project at Cal Poly with Professor Matthew Harsh and faculty from across campus who are committed to developing an approach to innovation grounded in humanistic principles and values. Outside of the classroom, she hosts the “Technically Human” podcast, where she discusses what it means to be human in our modern, tech-focused world. Her conversations with industry leaders dive deep into the nuances of topics like surveillance technology, humane design in fashion and civil discourse on racism.

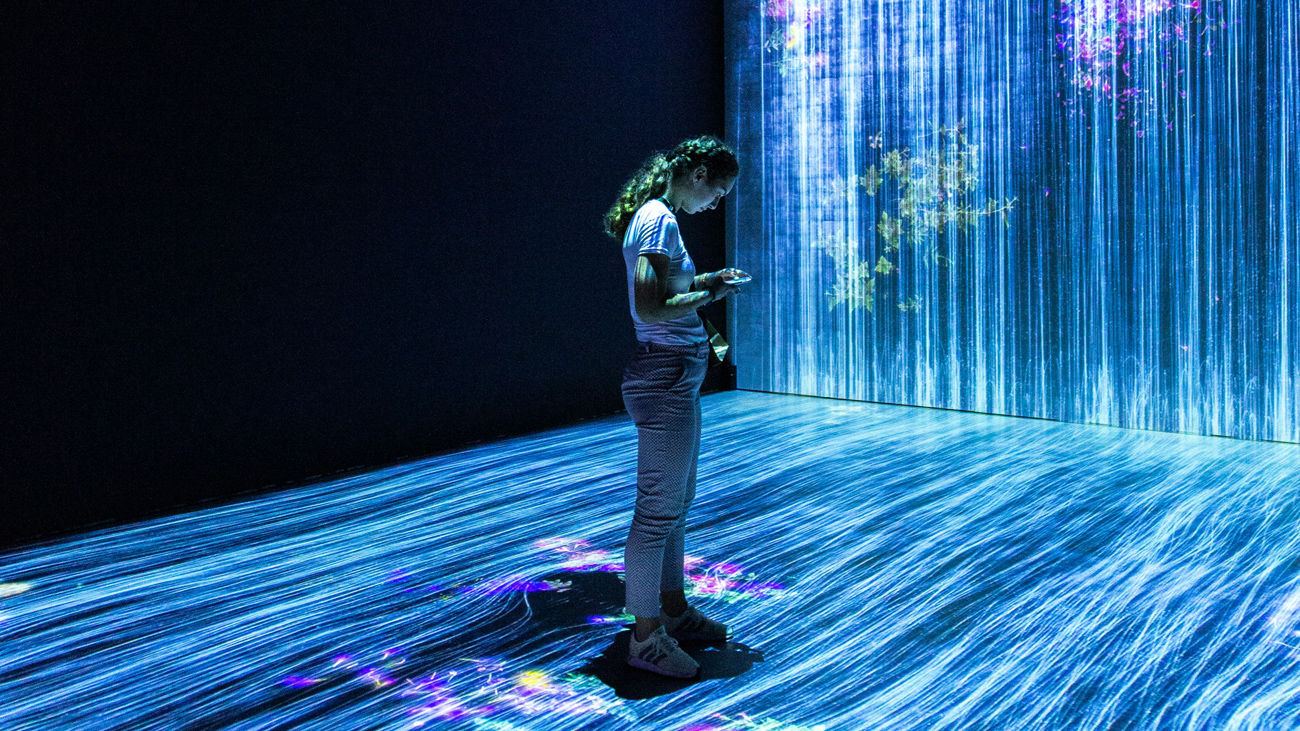

Last year, she began teaching an English course, also titled “Technically Human,” which examines the relationship between fiction writing and technological advances. Students zero in on themes of goodness and humanity in relation to technology to spot trends and major shifts in a culture’s collective imagination. Using a blend of technical and humanities skills, Donig shows future professionals how they can counter the inertia of tech-driven inequity while helping them develop career skills that will be valuable in tomorrow’s job market.

Donig was inspired to join the movement toward more ethical technology after studying the striking inequities and unintended, or blatantly neglected, consequences brought on by the culture of tech production. She’s also studying the academic trajectories that not only propel the tech workforce but also continue to perpetuate those consequences.

“Everything we build has built within it the passions, blind spots and biases of the people who build them,” she says. “The culture of technology orbits around, perpetuates, then ossifies and, in many ways, expands these inequities and these biases.”

When Donig introduces her students to the ways technology perpetuates unintended consequences, she often points to the lack of women in commercial aviation. Some people explain it by pointing to the Air Force’s career paths and time away from family, but that’s not the only factor. Airplane cockpits are physically designed to fit the average man, helping men be more agile and confident while navigating the controls, and leaving out women and other people with smaller statures.

That’s just one example of inherited bias in technology; others include photography technology that favors lighter skin tones and car seat belts that protect men’s bodies better than women’s or children’s. On the other hand, computer technology built to be more inclusive for people with disabilities, like closed captioning and alt text, has enhanced the digital experience for a wide cross-section of users.

On the bright side, Donig has noticed an emerging career path in ethical technology as major companies and technological innovators are hiring chief ethics officers, assessment managers and “white hat” hackers to develop and measure the impact of leading-edge products, services and tools. She’s teamed up with other professors and student researchers to analyze these job postings, with the aim of better positioning Cal Poly as a leader in ethical tech research and training. The project has become one of the university’s Strategic Research Initiatives on the future of the tech workforce.

“I have a hypothesis: the future of tech work is not going to primarily ask, ‘Can we build this?’ It will ask, ‘Should we build this?’” she observes. “We’re asking, in a sense, ‘How are our capabilities aligned with our values?’”

Donig is eager to keep driving the conversation in every arena she enters, knowing the potential for our stories, our aspirations and our creations to shape how people live their lives.

“When we design technology equitably and ethically, I think we make the world better for everybody,” she says. “There is an ethical mandate there, but I think that there is a value proposition there too: solving problems for large groups of people, particularly people who have been ignored. Hopefully bringing our culture back to that will help us build a world of technologies that better reflect our human values.”